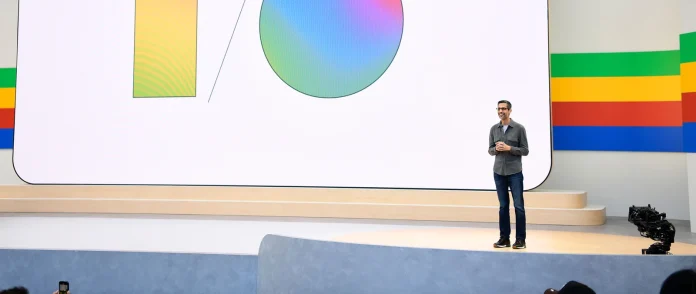

At the 2024 Google I/O, the company made a big splash about the updates to Google AI Overview, its artificial intelligence (AI) system for Google Search. The company seemed to indicate that the days of crawling the web for information were over. From the demo at least, it seemed as if Google’s AI would now tell us everything we needed to know, without ever having to leave the Google UI. On the face of it, this feature should have been a big win for consumers. In theory, at least, it was supposed to make getting information faster, easier, and more efficient.

Sadly, since launch, AI Overview has been anything but. The feature, a successor to Search Generative Experience (SGE), has been a complete failure, telling users to commit suicide, eat rocks, and add non-toxic glue to their homemade pizza. It has spawned a series of memes, with the company now reportedly scrambling to find a fix. Google spokesperson Meghann Farnsworth told The Verge that the company is “taking swift action” to address certain queries. Of course, that is far from an admission of failure, but then again, it’s par for the course in tech. No company wants to admit it failed, especially when dealing with such unpredictable technology.

This isn’t the first time AI has been caught sharing outright misinformation or fabricated responses. It is a common occurrence among all major developers. While Google scrambles to find a fix, it is worth asking: is AI really ready for the mainstream? Based on what we are currently seeing, the answer is clearly no, but understanding why takes some nuance.

AI’s Training Problem

AI systems like Google AI Overview are only as good as the data they are fed. This is where the issue starts. While the internet is good for many things, high-quality, reliable information is not one of them. Genuine news sources and research papers make up only a minuscule percentage of the net and are largely hidden behind paywalls. This means that they are mostly inaccessible, both to general consumers and to AI models. As a result, AI systems like Google AI Overview must crawl, synthesize, and output freely accessible data such as blogs and other SEO-optimized web pages.

Naturally, these sources aren’t much concerned with factual accuracy. A large majority of them are either built to capture ad revenue from page clicks or written by people sharing opinions disguised as fact. Crawling these means Google AI Overview isn’t being trained on the best of the internet, but rather the worst of it. A good example of this is Reddit, which appears to be a major source for Google AI Overview. The much-memed suggestion to fix pizza with glue was traced back to a Reddit comment in a thread that is more than a decade old, and which was clearly a joke. That appears to be something Google did not take into account. It seems most of the false information presented by Google AI Overview can be traced back to Reddit, Quora, or other internet forums where fact and fiction freely mix and mingle.

If we want AI to give us better results, we need to give it better data. There is no other workaround. The system works in much the same way we humans learn. If someone is force-fed a diet of misinformation like “immigrants are bad” or “the election was stolen”, they are going to believe and share the same. This is a problem that everyone knows exists, but few are addressing. OpenAI seems to be an outlier here. In December 2023, the company announced a deal with publisher Axel Springer that will enable OpenAI’s ChatGPT to draw on the publisher’s content for answers. This means ChatGPT users will be getting fact-checked, authenticated information from sites like POLITICO, Business Insider, and WELT, automatically raising the bar for output quality.

Doing this isn’t easy, and thanks to the way the modern internet works, the number of high-quality sources is declining with each passing day. However, it is a necessary step to address the training problem that AI has. Google is clearly feeling the pressure, not just from OpenAI but from other competitors too, to build a robust solution. As a result, it has lived up to the Silicon Valley mantra “move fast and break things”. Google has literally broken search and is now scrambling to find an answer.

AI Needs Time, and Regulations

It appears Google is well aware of its shortcomings, restricting Google AI Overview to only a handful of key queries in select regions and languages. However, as the product starts rolling out more widely, this issue is only going to get worse. It is one thing to tell users in a tech-savvy and fairly educated nation like the USA to commit suicide, but it is another thing to do so in the developing world where users are slowly coming online and experiencing the internet’s power for the first time.

Google AI Overview will clearly get refined over time, but even if Google fixes the training data problem, that does not solve the issue. Instead, the company and society in general must contend with the larger issue of a technology that is far from ready for the mainstream. The rapid growth of AI tools without safeguards has raised many questions, most of which don’t have answers. For one, what happens to all the publishers who rely on clicks and ad revenue when Google AI Overview takes away those clicks? For another, how can AI tools tell from written content alone whether someone is joking or being genuine? Understanding that nuance is vital to the way humans process and understand information. What about AI answers that lead to actual physical harm? Will Google or other developers take the blame in such a situation?

That’s not to say AI is bad. At I/O, Google did show off some genuinely good use cases for the technology, but at the moment those features just aren’t ready for everyone to use. At the core of the problem is that these companies don’t seem to be talking to each other, or indeed to governments, to build a robust technology that can benefit everyone. At the moment, tech is in an arms race to build AI tools that draw users in, no matter the cost. With regulations almost non-existent, this is likely to continue until real-world harm is done. If Facebook could cause an insurrection at the US Capitol, just imagine the scale of damage AI can do.

AI tools are already being used to create deepfakes of politicians. Combine that with a search response that isn’t fact-checked or true, and you have an easy way to rile up a crowd into launching a protest at best, or a civil war in the worst-case scenario. The world clearly isn’t ready for the chaos that AI could unleash. The only way to prevent that is through regulation. In the meantime, we can only hope better sense prevails in Silicon Valley.
